Beyond rational persuasion: how leaders change moral norms

Authors: Dr Charles Spinosa, Matthew Hancocks, Haridimos Tsoukas, Billy Glennon

Abstract

Scholars are increasingly examining how formal leaders of organisations change moral norms. The prominent accounts over-emphasise the role of rational persuasion. We focus, instead, on how formal leaders successfully break and thereby create moral norms. We draw on Dreyfus’s ontology of cultural paradigms and Williams’ moral luck to develop our framework for viewing leader-driven radical changes to norms. We argue that formal leaders, embedded in their practices’ grounding, clarifying, and organising norms, get captivated by anomalies and respond to them by taking moral risks, which, if practically successful, create a new normative order. We illustrate the framework with Churchill’s actions in 1940 and Anita Roddick’s Body Shop. Lastly, we discuss normative orders, when ordinary leaders change norms, evil, and further research.

An extensive list of our source material for this paper is available here.

Introduction

In Beyond Good and Evil, Nietzsche noted that the primary act of leadership is to create values. This theme is ancient and modern. Plato recommended “philosopher kings” who would understand the good and create a just polis. Weber stressed the “inner determination and inner strength” of the charismatic leader, who “demands recognition and a following by virtue of his mission”. For Barnard, business leaders instilled in organisations non-economic values to give followers “faith in the integrity of the common purpose.” Finally, Selznick claimed a leader’s main task was the infusion of organisations with values, thus turning organisations into institutions. For early institutionalists, values were moral: they indicate desirable ends, carry “a normative weight,” and incorporate a “moral imperative”. In organisations, moral values give rise to moral norms – i.e. influential standards of good behaviour – which together constitute normative orders.

Most leadership scholarship (see source material) has shown only limited interest in moral norm creation. However, researchers are rediscovering the importance of value-infusion in organisations and, to a lesser extent, leadership’s role in it. Thus, some management scholars are now examining how norm change happens.

Despite this progress, three main problems persist in understanding how leaders make such change happen. First, with the rising appreciation of leadership as distributed and shared, norm change is increasingly viewed as emerging incrementally through a complex, dynamic process of mostly discursive micro-interactions among teams. While welcome as a corrective to excessively individualistic, top-down models of leadership, the shift to bottom-up norm change has obscured the critical role of formal leaders in changing norms. Because of the authority invested in formal leaders and the resultant asymmetry in interactions between formal leaders and followers, leaders can act according to new norms and inspire support from followers. Thus, there is a need to focus on the formal leaders’ role in changing norms.

Second, while some scholars (see source material) have looked at leader-driven rule-breaking, most have ignored rule-breaking as a cause of norm change. Moral entrepreneurship theory researchers see new norms arising from moral judgments made in places where no dominant norms obtain. These researchers see creating norms as a developmental process, contingent on the sophistication of leaders’ moral reasoning. However, moral reasoning fails to cover the cases where leaders undertake morally risky actions that break with the prevailing norms and that are, therefore, difficult to justify a priori.

Third, in addition to its affective charge, rule-breaking sometimes involves acts of symbolic, emotional, or physical violence, as in sacrificing troops, civilians, top employees, or operating units for the sake of morally and practically valuable purposes. Such rule-breaking with its radical norm change involves significant risk for formal leaders undertaking it.

We address these gaps here. We examine how formal leaders, in the face of moral anomalies that defy current moral reasoning, change norms by undertaking morally risky rule-breaking actions with the complicity of followers. Drawing on existential and moral philosophy, especially Dreyfus’s account of Heidegger’s cultural paradigms and Williams’s account of moral luck, we extend the understanding of leader-driven norm change. We argue that courageous, morally sensitive leaders, whom we will focus on here, are drawn to moral anomalies, whose practical resolution requires a radical change in norms and, ultimately, the normative order of their organisations or communities. Such leaders respond to anomalies by taking moral risks in varying degrees where they engage in shocking actions that, when practically successful, become new norms. Typically, these leaders first take moral risks that question the current normative order; then they take moral risks that shock; finally, they take more shocking but articulable moral risks that, if practically successful, establish a new normative order.

In the next section, we review the literature on leaders driving norm change, position our argument, and state our research question. Following the literature review, we present our theoretical framework and then apply it to reveal Churchill’s bold, rule-breaking actions to replace the British normative order. Then we apply the framework to show Roddick’s more common, rule-breaking actions to replace the normative order of her company and displace that of the beauty industry. We close by discussing the enduring and malleable nature of normative orders, the nature of moral-risk-taking by leaders less visionary than Churchill or Roddick, the question of evil associated with moral-risk-taking together with why leaders take moral risks rather than simply trying to persuade people, and then our suggestions for further research.

Research review

In this section, we review relevant research on the role of leadership in changing norms, to acknowledge contributions, spot gaps, and point out conceptual problems. Specifically, we will briefly review and critique the three main streams of research exploring leadership and changing norms: moral entrepreneurship theory, practice-based leadership, and institutionalism. Although these are distinct and often unrelated streams of research, each deals with leadership and norm change, and it is, therefore, important to explore the core arguments and assumptions made.

Moral entrepreneurship theory

Moral entrepreneurship theorists, working within the ethical leadership research tradition, usefully note that ethical leaders go beyond doing the right thing to creating new norms. Accordingly, ethical leaders spot opportunities for moral entrepreneurship, articulate a vision of moral change, and seek to gain power to influence followers to adopt the new norms. Moral entrepreneurs exploit “moral voids”, situations in which there are no shared, adequate moral standards. Moreover, moral entrepreneurship is positively shaped by agents’ moral awareness, moral development, and moral identity. In short, moral entrepreneurs have a more refined insight into moral requirements because they see the moral side of issues that others miss. Additionally, moral entrepreneurs persuade others of the appropriateness of the norms they advocate. Thus, persuasion is the core of leaders’ moral entrepreneurship.

Researchers recognise that moral entrepreneurs “face risks” in their efforts to change norms but do not explore how moral-risk-taking contributes to making new norms or how it influences adoption. Moral entrepreneurship theory does not address norm change that runs against prevailing “normatively appropriate conduct”. Accordingly, the main outcomes of moral entrepreneurship are the creation of a “better society” and earning “the trust of stakeholders”. Such outcomes presuppose that it is already known what makes a good society and what stakeholders will trust. In many morally exigent circumstances, neither is known in advance. Should we expect contemporaries to trust a Lincoln who tells an equivocating lie to mislead Congress (about the status of peace negotiations) in order to gain passage for the amendment abolishing slavery? Similarly, should patients and leaders of low-income countries trust a pharmaceutical company’s leader who refuses to give away the licence for COVID-related vaccines? Should investors trust the leader who does? The rationalistic models of moral entrepreneurship do not take up leaders’ moral-risk-taking.

Practice-based leadership

Scholars (see source material) studying practice-based, distributed, and shared leadership see leadership neither in individual traits nor confined to dyadic leader/follower exchanges. Rather, leadership is viewed as a group’s practice involving interactions, mediated by discursive-material means, whereby members seek to coordinate their efforts. Such a view usefully highlights leadership’s interactively produced achievements. Accordingly, leadership bubbles up moment by moment in various quarters and levels of the organisation and is largely improvisatory. Improvisation, with its emphasis on flow, creates variations on themes but not radical changes in course. Research typically focuses on the conversational turning points that drive inflections in norm change.

Practice-based leadership research also tends to take a “thin” view of practice: what people simply do and say in interactional situations without considering the moral ends driving action. The normativity of practice is underplayed. Consequently, the thin view of practice obscures both the social embeddedness of human agency and the “moral dimension of practice”, especially the underlying norms guiding the life agents pursue. A “thicker” conception of practice is necessary to appreciate change in norms where strife and rule-breaking replace conversational turning points.

Institutionalism

Since the work of Selznick, institutionalists have examined how leaders change organisations’ norms. However, institutionalists are vexed with the paradox of embedded agency: if leaders, embedded in institutions, tend to preserve or adapt institutional norms, how could they come to introduce radical change? Thus, institutionalists mostly examine adaptation. Nevertheless, Garud et al. noticed that some leaders-cum-entrepreneurs break with norms and then gain legitimacy. Following that insight, institutionalists investigate how leaders find opportunities legitimately to break and create norms.

Generally, those opportunities appear when conflicts between competing logics emerge or when existing moral frames are transformed to accommodate alternative frames. Examples include organisational values of power and duty conflicting with institutional values of collectivism and novelty; the conflict of profit maximisation and risk minimisation opening a space for rogue trading; and an intern persuasively reframing the nature of the hardwood industry’s work from extracting needed resources to sustaining its business. In general, for institutionalists, leaders change norms through rational persuasion made possible by leaders taking advantage of contradictions. Institutionalists tend to refrain from examining radical-norm-change cases that do not involve rational persuasion.

While scholars across the three research streams offer significant insights, leader-driven, radical, rule-breaking norm change draws little attention. Only a few researchers adduce leaders who break established norms for the sake of an end that they think best serves their institution. Exploring leader-driven, radical norm-breaking and, through it, the creation of new norms is important for two reasons.

First, we seek to understand what beyond rational persuasion is going on when formal leaders take risky actions that go against the tide, are initially little understood by their followers, and yet enable the leaders to establish new normative orders. Second, insofar as radical norm change hinges on risk-taking, such change requires moral luck, which, excepting Michaelson and Horner (see source material), has not been adequately theorised and has received little exploration in the literatures. Moral luck is highly relevant to leader-driven, norm-breaking actions since their success depends on circumstances beyond the leaders’ control. Such risk-taking becomes more pronounced when leaders face “wicked” problems. Yet, our understanding of leaders’ moral luck remains underdeveloped. What justifies a leader taking a risk whose outcome depends on moral luck?

Our review shows that to understand leader-driven, radical change of norms properly, we must acknowledge the “embedded” nature of leader-driven agency and investigate the open-endedness that enables moral-risk-taking and moral luck. Thus, our research question is: How do leaders, embedded in practices, bring about radical change in norms among followers through undertaking morally risky actions? Our argument speaks to all three literatures, especially moral entrepreneurship theory, in that we show that moral entrepreneurs interacting with their organisations need not be rationally persuasive to achieve norm change. Moreover, our “thick” conception of practice with an open texture enriches the practice-based and institutionalist perspectives.

How leaders change norms radically: a theoretical account

In this section, we develop a theoretical framework for how leaders effect radical change in norms by undertaking morally risky actions to resolve anomalies. We draw on Dreyfus and Heidegger’s existential phenomenology and on Bernard Williams’ moral philosophy. As an overview, we draw on Dreyfus’ account, inspired by Heidegger, of how leaders, as cultural paradigms, at an abstract level drive norm change. Then we burrow down by drawing on Williams to understand how morally risky, shocking actions leaders take make the norm change happen.

Practices and norms: how they work and change

For Heidegger, we human beings are constituted out of our shared practices – i.e. ways of acting – for coping with ourselves, people, and things. In addition to sense-making practices, our practices include norms – behavioural guides to doing the right thing. The various norms we live by are co-ordinated by an overall organising norm. The organising norm co-ordinates other norms, especially when they are in conflict. Dreyfus illustrates organising norms with a caricature of how American and Japanese mothers interact with their babies. Following a self-assertive organising norm, American mothers encourage their babies to express their desires, while Japanese mothers following a harmonising norm interact to calm their babies. Such organising norms are the lynchpins of normative orders. Three critical insights follow.

First, the norms of a community tend to meld to support organising norms. For example, norms such as abiding by an honour code drive everyday comportment; managers feel compelled to act honourably in new situations.

Second, norms tend to manifest their organising norm in cultural paradigms that exemplify what makes sense and what is right to do. Typically, these paradigms are works of art (e.g., Sophoclean tragedy), the words of a thinker (e.g., Descartes), religious sacrifices (e.g., Abraham’s Isaac), or, importantly, the founding acts of a leader. When a culture has a paradigm that expresses its organising norm, everything exists more intensely. See source material.

Third, aside from its organising norm, a culture’s other norms come in two complementary and opposing kinds. There are grounding norms that tell us what matters, and there are clarifying norms that tell us explicitly how to see things and what to do. The grounding norms govern what is unquestioned – taken for granted – in a community’s way of life and, thus, provide a ground for the clarifying norms. For instance, in the US, libertarian clarifying norms for acting on rights depend on grounding communitarian norms of neighbourly give and take. The grounding norms determine how far to take the rights. The tension between clarifying and grounding norms is stabilised by the organising norm. Thus, the US Constitution stabilises the tension between libertarian and communitarian norms by setting up the organising norm of debate that reveres precedent. US courts, assemblies, and even family discussions follow this norm. Altogether, clarifying norms, grounding norms, and the organising norm make up the normative order of a practice. Thus, for Heidegger, there is no paradox of embedded agency: we are embedded within conflicting norms that are only weakly stabilised by an organising norm manifested in a cultural paradigm.

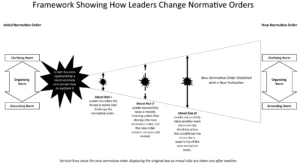

How do normative orders change radically? Drawing on Dreyfus and his associates (see source material), we describe five key moments of radical norm change (see Fig. 1). First, there is a prevailing normative order where people have a clear sense of what is right to do. Second, a leader is captivated by and calls attention to an anomaly within the prevailing normative order. Third, the leader takes a mild moral risk in proposing actions to resolve the anomaly in a way that goes against the prevailing normative order. Fourth, the leader takes, and succeeds at, a shocking action in response to the anomaly, but the implicit norm change is only faintly understood. Fifth, the leader takes, and succeeds at, another shocking moral risk, which is understood; the new normative order arises.

Fig. 1

Dreyfus’s Heideggerian framework does not give the details about how shocking, risky actions create a new normative order and its acceptance. For that we require Williams’ moral luck.

Moral luck: how morally shocking actions yield a new normative order

Bernard Williams, the late-20th-century, Nietzsche-influenced moral philosopher writing in the Anglo-American tradition, shows that moral luck matters in making moral evaluations of actions and people. The driver who neglects to repair her brakes and hits someone is morally worse off than the driver who does the same without any casualties. Nagel, Zimmerman, and Wolf (see source material) try to treat Williams’ finding as a moral confusion but end up in his position: we have a narrow notion of justice, which says that we are only blameworthy for what we intend, and a non-narrow moral virtue that requires us to take on blame based on consequences. Williams argues that without the moral intuition that consequences matter in making moral evaluations, we would be living in our own heads and not involved with each other, as we are.

Thus, Williams attacks all predominant strains of moral thinking in the West: deontological (following ‘universalisable’ principles like the golden rule), utilitarian (following the principle of the greatest good for the greatest number, calculated in advance of action), and virtue ethics (cultivating, through habituation, a virtuous character and phronetically acting on the perceptions it generates). Under these views, whether a person is good or evil does not depend on good or bad fortune but, strictly, on her or his good or evil intentions (or habits). If we say that she is morally good, we cannot mean that she found herself by luck doing good things. We mean that she intentionally or through cultivation set out to act in the right way even if, without her negligence, things go awry. Traditionally, misfortune does not make one evil.

Nagel and Williams note that, in the most common cases of successful moral-risk-taking, one, with luck, simply gets a pass. No moral norms shift. However, Williams gives us a slightly fictionalised case of Gauguin who takes a moral risk that harms his family and yet receives gratitude for his art, which itself weakened bourgeois norms (Williams, 1981, pp. 22–26), and thus, in retrospect, made abandoning his family the right thing to do. Following Williams, Gauguin is a failing Parisian artist stuck in a bourgeois marriage with five children. He has a vague sense that his art requires breaking with the bourgeois constraints around him to work in a more primitive, natural setting. He abandons his family, flees to Tahiti, and paints exactly the paintings of the real Gauguin.

Assuming his art required fleeing to Tahiti, did Gauguin do the right thing in abandoning his family to produce his great, moral-sentiment-changing art? Williams and others grateful for Gauguin’s work have the moral intuition that Gauguin did the right thing, even if, as with a leader who harms a few to save many, the family was wronged. In saying that Gauguin made the right decision, one does so with the benefit of hindsight: one is taking account of one’s gratitude for Gauguin’s earthy, erotic, vitally alive paintings. Williams’s point is that, so far as we gratefully accept Gauguin’s new way of seeing things, we are morally complicit with Gauguin and are obliged to say that Gauguin made the right decision. However, had Gauguin not achieved great art, his treatment of his family would have been immoral.

Similarly, leaders facing anomalies take moral risks requiring, at times, morally shocking actions. When these actions succeed – resolve the anomaly and show us a new way of seeing it and the world – we are grateful, complicit, and deem the leaders good. When leaders fail, they are immoral. The shocking, risky actions Dreyfus’ leader takes in response to an anomaly are the morally risky ones of Williams’ account. Because the risks are moral as well as practical, their success makes these shocking actions (or ones like them) a new norm. We will show how the Heidegger + Dreyfus + Williams framework reveals change in normative orders with Churchill’s leadership during 1940, followed by a briefer and less dramatic illustration of Anita Roddick’s founding of The Body Shop.

Churchill changes the British normative order in 1940

The conceptual robustness of the framework comes out clearly when applied to Churchill’s management of WWII in 1940. We have chosen an “extreme case” of a leader in war to bring out as clearly as possible the sharp dilemmas involved and the risks undertaken by leaders. Churchill’s morally risky actions grew out of and transformed the way Britain’s grounding, clarifying, and organising norms made sense of Hitler. Churchill is one of the most studied leaders, and there is substantial empirical material about him. We will draw on historian Andrew Roberts’s recent account of Churchill, and Churchill’s own account of 1940, which scholars deem factually uncontroversial (see source material).

Prevailing normative order

Churchill and the British leaders, with whom he was in deep tension, reveal the pre-1940 normative order of Britain. Churchill himself manifested the traditional English grounding norms of buoyancy, industriousness, boldness, and, when attacked, fierceness. His eloquence and appeal to non-elites depended on his capacity to resonate with those grounding norms. Churchill was aware of the norms he stood for and that he thought it his job to manifest: “The buoyant and imperturbable temper of Britain, which I had the honour to express, may well have turned the scale” in the war.

His opposites in the Tory Party – Baldwin, Chamberlain, and Halifax – vividly represented the clarifying norms: high-minded judgment and deferential gentlemanliness. These grounding and clarifying norms were co-ordinated by the organising norm of affability, which, when challenged, turned to affability’s cousin, appeasement.

In the late 1930s and early 1940, appeasement dominated. Chamberlain and Halifax appeased Hitler because high-minded judgment assured them that Hitler was undoing the humiliation of Versailles and that, once accomplished, he would become gentlemanly too. That judgment seemed sensible on the background of English buoyancy. Hitler played brilliantly on this gentlemanly norm. From his March 7, 1936 declaration after German troops entered the Rhineland (“We have no territorial claims to make in Europe”), through his 1938 speech calling Churchill a warmonger, to his “last appeal to reason” speech on July 19, 1940, in which he said that “he had never planned to ‘destroy or even damage’ the British Empire,” it was Churchill who looked fierce and barbaric. Appeasement worked both ways. Chamberlain and Halifax appeased Churchill with attention and lesser government offices. This appeasing normative order held sway until May 9, 1940 when Hitler, having invaded Poland, repelled the British from Norway.

Anomaly

Hitler’s unquenchable thirst for brutal conquest was the anomaly that captivated Churchill. Preceding May 1940, Churchill repeatedly called attention to how the common assumptions got Hitler wrong. Churchill was nearly clairvoyant. In 1936, he saw Hitler’s military spending and declared the Rhineland was “but a step”. Churchill also predicted the invasion of Austria, Czechoslovakia, and the USSR. Churchill judged Hitler as barbaric, mendacious, and responsive only to power. Roberts speculates that Churchill could get a grip on Hitler because of Churchill’s 1898 military experience battling ferocious Islamic “extremism” in India.

The pre-1940 normative order was so strong that, even during the 24 May-3 June evacuation of Dunkirk, Halifax still saw Hitler in the gentlemanly light and repeatedly proposed seeking peace terms with him. Such gentlemanly views had rationalised each invasion: the 1936 occupation of the Rhineland was to overcome the humiliation of the Versailles Treaty; the 1938 Anschluss with Austria and occupation of Sudetenland was to enforce the rights of Germans residing there; the 1939 taking of Czechoslovakia was for the sake of order; the 1939 invasion of Poland was obfuscated since England had to declare a war it could not then fight. Halifax wanted to do the same for the April 1940 invasion of Denmark and Norway.

Mild moral risk to destabilise the normative order

After Norway, the Tories realised that appeasement would no longer work politically and made Churchill Prime Minister. In his first (May 13, 1940) speech to the Commons after becoming PM, Churchill spoke famously with norm-breaking fire that questioned the high-minded normative order.

“I have nothing to offer but blood, toil, tears, and sweat. . . . You ask, What is our policy? I will say: It is to wage war, by sea, land, and air, with all our might and with all the strength that God can give us: to wage war against a monstrous tyranny, never surpassed in the dark, lamentable catalogue of human crime. . . . You ask, What is our aim? I can answer in one word: Victory—victory at all costs, victory in spite of terror; victory, however long and hard the road may be; for without victory there is no survival.” (1959, p. 245)

Clearly, this speech called on the grounding norm of British fierceness and depended on that for its sense. Such a speech would seem insane to a mild people. But it also called for new clarifying and organising norms. Although Churchill did not name them then – brutal realism and ruthlessness, respectively – he enacted them. On 12 May, he authorised RAF bomber attacks on “railways and oil refineries in the Ruhr and other military objectives east of the Rhine”. One foreign secretary was prescient enough to write “total war begins”, but few saw this. Churchill had regularly to repeat to his War Cabinet that the only safe way to negotiate with Hitler was to “convince Hitler he couldn’t beat us”. For that to happen, a new normative order had to arise.

First shocking moral risk

Churchill’s first major moral-risk-taking came on May 26, 1940. The German Army was pushing a little over 300,000 British troops – the heart of the British army – to Dunkirk and with superior air power and tanks wanted to finish the Army off. The British had a plan for the evacuation of Dunkirk by using small, private boats from the British ports across the channel. However, the evacuation would take a few days, and the German units were closing in fast. Churchill realised that he had about 4,000 soldiers at Calais. So, to delay the German forces, he ordered the troops in Calais to stop the German army. The attack started on May 24, 1940. On 26 May, Churchill took the risk.

“I now resolved that Calais should be fought to the death, and that no evacuation by sea could be allowed to the garrison. . . . It was painful thus to sacrifice these splendid, trained troops, of which we had so few, for the doubtful advantage of gaining two or perhaps three days, and the unknown uses that could be made of these days. . . . The final decision not to relieve the garrison was taken on the evening of May 26. . . . Eden and Ironside were with me. . . . We three came out from dinner and at 9 P.M. did the deed. . . . I could not help feeling physically sick as we afterwards sat silent at the table.”

From then on, neither Churchill nor his generals could walk away morally unscathed; such sacrifice could only be justified by victory. Though Churchill saw the sacrifice of troops at Calais as the crux, the lucky deliverance of the troops from Dunkirk and Churchill’s “We shall never surrender” speech covered over the new norms of brutal realism and ruthlessness. The elites still hoped that, with the coming fall of France, Halifax would replace Churchill and secure a negotiated peace with Hitler. Even today we tend to see the evacuation of Dunkirk in morally heroic terms; however, the risk of failure was huge. Churchill had, 25 years earlier, sacrificed troops at Gallipoli, failed, resigned from office, and been reviled for years as an immoral, murderous maniac who loved war more than his soldiers’ lives. Had he failed at Dunkirk, he would certainly have been reviled for sacrificing Calais troops, whom he could have evacuated successfully. He likely would have had to resign or give up running the war.

Second shocking, articulable moral risk

The elite’s worries that France would fall and leave Britain alone in the war against Germany came true when Marshal Pétain signed the Armistice with Germany on June 22, 1940. Though the Armistice called for demobilisation of the French fleet, Churchill did not trust Hitler to do so because combining the French fleet with the German and Italian fleets would threaten the British naval advantage. Churchill had then to contemplate what Roberts calls “one of the most ruthless attacks on an erstwhile ally in the history of modern warfare”: bombing the French fleet. Churchill called this decision “a hateful decision, the most unnatural and painful in which I have ever been concerned…. It was a Greek tragedy”. He thought full-scale war with France was likely. The act was shocking.

Most of the French fleet was at Oran and Alexandria. On July 2, 1940, Churchill and the War Council instructed Admiral Somerville to offer the French several choices: sail to the French West Indies or British ports; sink the ships; or be sunk. At Oran, where most of the French fleet lay, the French Admiral Gensoul hesitated. Churchill recounts:

“The distress of the British Admiral and his principal officers was evident to us from the signals which had passed. Nothing but the most direct orders compelled them to open fire on those who had been so lately their comrades. . . . A final signal was dispatched at 6:26 PM: French ships must comply with our terms or sink themselves or be sunk by you before dark.”

At Oran, the bombardment lasted 10 minutes and killed 1,297 French sailors. At Alexandria, the French gave in.

When Churchill reported the destruction of the French fleet to Parliament, he did not give an inspirational speech. He gloomily delivered his “sad duty.” As he spoke, “there were audible gasps of surprise”. This report succeeded where his much more memorable Dunkirk speech failed. Churchill wrote:

“The House was very silent during the recital, but at the end there occurred a scene unique in my own experience. Everyone seemed to stand up all around, cheering, for what seemed a long time. Up till this moment the Conservative Party had treated me with some reserve. . . . But now all joined in solemn stentorian accord.”

Churchill summed up the new normative order:

“The elimination of the French Navy as an important factor almost at a single stroke by violent action produced a profound impression in every country… It was made plain that the British War Cabinet feared nothing and would stop at nothing.”

All who applauded either in the House or in their hearts were complicit. To beat the Nazi war machine, brutal realism and ruthlessness were needed. The new normative order emerged. The grounding norms were the same: buoyancy, industriousness, and, since they were under attack, fierceness. The clarifying norm, however, was new: brutal realism. Brutal realism alone would suggest defeat, but with buoyancy and fierceness grounding it and a new organising norm – ruthlessness, “victory at any cost” – Britain could wage war effectively.

Churchill was clear about the leader’s role in moral-risk-taking:

“[A]ll the responsibility was [to be] laid upon the five War Cabinet Ministers. They were the only ones who had the right to have their heads cut off on Tower Hill if we did not win.”

Figure 2 illustrates the change of norms. In sum, captivated by the anomaly of Hitler, Churchill questioned the old normative order of buoyancy (grounding norm), gentlemanliness (clarifying norm), and appeasement (organising norm) by insisting on the danger of Hitler and calling for victory at any cost, which would eventually become the new organising norm. Then, driven by danger, he took the shocking risk of sacrificing his own and, later, allied troops at Calais and Oran, respectively, thus manifesting an already-existing grounding fierceness, while bringing forth a new clarifying brutal realism and ruthlessness as the organising norm. This constituted the new normative order that became embodied in Mutually Assured Destruction, which Churchill formulated, and lasted through to the end of the Cold War. Although Churchill is one of the world’s greatest orators, his morally risky actions, which could not be rationally justified, (and not his speeches) produced the “Oh, my God; this is what we face and have to do” moral paradigm shift.

Fig. 2

How Churchill changed the British normative order in WWII

Some scholars (see source material) account for Churchill’s moral risks with Carl Schmitt’s legal State of Exception or the Dirty Hands argument (Walzer). In these cases, the leader engages in an immoral action to preserve the community and return it to its antecedent norms or to the norms it had already aspired to. Insofar as this is the case, the State of Exception and Dirty Hands accounts are not accounts of radically changing normative orders. Actions under those doctrines are justified by the value of the current norms and the promises of preservation and return. Our argument, rather, focuses on how leaders institute new norms and normative orders.

Roddick and the founding of The Body Shop

In drawing on Churchill, we have admittedly chosen an “extreme case” of a leader who radically changed norms by taking extraordinary moral risks. Our argument, however, is more general and can be extended to cases of business leaders who take, in comparison, milder moral risks in the face of anomalies and thereby shift the normative orders of their organisations and industries. Michaelson’s exploration of Vagelos’s moral risk-taking in devoting Merck’s resources to curing river blindness, even though Merck would not recover the cost from those afflicted, is a good example.

The main difference between extreme cases and ordinary business cases is that business leaders frequently turn already well-understood prudential norms (wise to follow where possible) into moral norms (required to follow) and, therefore, can make the change in the normative order by taking one shocking, articulable moral risk. Thus, their moral risks tend to be milder than the risks taken by political leaders in war. We illustrate this below with the case of Anita Roddick.

Anita Roddick’s main moral-risk-taking action was, like Gauguin’s, a personal betrayal, which she committed for the sake of establishing growth as the organising norm of The Body Shop. According to Roddick, the 1970s “beauty business” had a normative order consisting of a grounding norm of encouraging women to seek physical attractiveness, a clarifying norm of seeing beauty as youthful, and an organising norm of inspiring women to “feel dissatisfied with their bodies” and making “miracle claims” of enabling youthfulness (Roddick, 1991, pp. 9, 15–16).

In the 1970s, women became more autonomous, and the science of aging made the industry’s self-interested propaganda transparent. However, the growing awareness did not drive change in the normative order, and that anomaly captivated Roddick. In response to it, she discovered that women all over the world had for centuries been caring for their skin using natural ingredients. She wanted to draw on that knowledge to end the beauty business regime of deceit. She wanted to make purchasing beauty products an act of fun, compassion, and frugality. That became her clarifying norm. Her grounding norm: women knowledgeably taking care of their bodies. The new organising norm was that of a caring, small-is-beautiful, family business.

As Roddick started to succeed, she encountered a new anomaly: the beauty business could happily give her a small-is-beautiful niche and not change at all. To shift the norms of the industry, The Body Shop had to adopt the organising norm of a high-growth enterprise. She took the mild moral risk of raising the issue with Gordon, her husband and also her business partner, and family. They got her point but held firm to a family business norm – no expansion. So, when her husband was out of town, she took the shocking moral risk of selling half the company to Ian McGlinn for £4,000 to open a new store. Her betrayal of Gordon’s trust was both immoral and compounded by treating the family business with fiduciary irresponsibility. However, she had two-fold moral luck. When Gordon returned, he adopted her vision of growth, forgave her, and became her “rock.” Had the second shop failed, the forgiveness would likely not have lasted. With growth, a sense of complicity would increase. From 1976 to 1990, The Body Shop grew from one shop to 600 and had the largest overseas presence of any British retailer.

Roddick’s moral risk-taking replaced the normative order of The Body Shop itself and displaced the industry’s normative order. The new order had a clarifying norm of compassionate, frugal fun in purchasing, grounded in a norm of knowledgeably taking care of oneself, with both growth and valuing women’s autonomy as the organising norm. Many see Roddick’s order as better than that of the old beauty business; Sephora as well as many boutiques dwell in Roddick’s order. (Virtually all imitate Roddick’s stand on animal testing.) Figure 3 illustrates how Roddick took moral risks to change the normative orders of The Body Shop and the beauty business.

Fig. 3

How Roddick changed the normative order of The Body Shop and the beauty industry

Discussion

In this paper, we explored the leader-driven, radical change of norms through morally risky actions. We focused on this topic since moral entrepreneurship theory, the practice-based theory, and institutional theory have conceived of leader-driven moral change primarily through rational persuasion. We have argued that leaders, embedded in their grounding, clarifying, and organising norms, get captivated by anomalies and respond to them by taking moral risks, which, if practically successful, will create a new normative order. In this section, we discuss (a) the nature of norm change, (b) how our framework applies to leaders more modest than Churchill or Roddick, and (c) the question that frequently arises with moral-risk-taking: how do moral-risk-taking leaders avoid evil? Finally, we summarise our contribution and offer suggestions for further research.

Moral norms change

We have shown how formal leaders successfully break and thereby create moral norms. Does this mean that the moral norms change forever? No, we make no such claim. Viewed from a Heidegger-influenced, process perspective, a normative order is both enduring and malleable; it provides continuity and marks out a collective entity, while, at the same time, it shapes the unfolding of self-reflective agency in open-ended contexts and thus is susceptible to change. Viewing a particular normative order as an ongoing accomplishment preserves the possibility of future change. Under this view, the past may be subtly, selectively, and contextually appropriated in the present. Although moral norms are relatively enduring, “they are not fixed or mechanically connected to action”. Relatedly, research on organisational identity change shows how the latter is open-endedly reconstructed through leaders drawing on symbolic resources to generate experiences that bring about new memories. In the same way, a normative order is both self-referentially defined and self-reflectively enacted and, thus, “fluid, in flux, and arguably unstable”. Within a normative order, the seeds of a new one are usually sown, although its precise future form cannot be known in advance.

Ordinary leaders

Admittedly, leaders like Churchill and Roddick are hard acts to follow. Does our framework apply to more ordinary leaders, those who are humble enough to know that they may not be Churchill or Roddick but are nonetheless determined to become good leaders? It does. Ordinary leaders face anomalies requiring moral risk-taking whenever they make improvements that require changes in their organisational cultures. As relevant studies show (see source material), the ordinary leader seeking to change an organisational culture will likely face an anomalous resistance, frequently from the most loyal, and thus will need to take a moral risk (such as a public termination seen as a betrayal) to overcome the resistance. Krantz (see source material) relevantly notes that “betrayal in the service of a higher purpose is inherent in organisational life and deeply linked to the capacity to lead”. Thus, one does not have to be King Agamemnon to face moral conflict (Nussbaum). One does not have to be Othello to experience self-inflicted tragedy (March & Weil). Tragic, dramatic cases remind us of what “we know and do not acknowledge” (Williams): we live in a world of betrayal, hubris, and sacrifice, and such acts are important in making leadership decisions (Contu).

Moral risk-taking and avoiding harm or evil

Taking any moral risk means potentially doing harm or evil. A leader’s moral risks are less casual and on a larger scale than individuals’; hence the consequences are greater. The leader takes leaderly moral risks to address an anomaly whose solution resists sensible actions from within the prevailing normative order. As the leader acknowledges, explores, and describes the anomaly and even takes a mild moral risk, the leader opens herself up to alternative solutions. If the leader is facing a genuine anomaly, the unworkable solutions offered will all come from the mindset of the prevailing order. Only then, knowing she faces a true anomaly which therefore demands challenging the prevailing moral order, does the leader take the shocking moral risk with the reasonable belief that if the action practically overcomes the anomaly, it will establish a new norm.

If the action fails practically, the leader will likely be deemed reckless, immoral, or, at worst, evil. Remember Churchill’s Gallipoli. However, even if the action succeeds, it might still be too repulsive to itself become a new moral norm, though it ends the rule of the older moral norm and becomes prudential. Though Churchill encouraged civilian bombing, he regularly worried about whether the bombing was going too far: “Are we beasts?” (Roberts, 2018, pp. 588, 780).

Nurturing and responding to that constant worry of going too far is probably the best prevention of evil. Kierkegaard concluded similarly. In thinking about the evil made possible with “the teleological suspension of the ethical” (the religious form of moral-risk-taking), Kierkegaard expected that his knights of faith would experience “fear and trembling” at the unethical content of their acts. Churchill’s physical sickness after the Calais order would count as such.

If the danger of doing evil is so substantial, why would leaders take moral risks at all? We seek more research on this topic but can give a preliminary answer. Courageous, morally sensitive leaders face moral anomalies that require shifting whole normative orders. As such, even their closest sympathisers (Churchill’s fellow party members, Roddick’s husband) will likely find the solutions proposed extreme. They might support the leaders’ actions as necessary in the current special circumstances, but their justifications resist the normative change. To succeed, the leader must eliminate that resistance. Frequently, people need to see the successful bold actions of the new order replacing or displacing the old to have the gestalt-switch “oh my God” moment that lets them know they are living in a new order.

We will likely have better leaders if they understand that they will be called on to take moral risks and if they constantly try to develop conscience, practical wisdom, and what Rowan Williams, the former Archbishop of Canterbury, aptly calls “a tragic imagination”.

“The tragic imagination,” he notes, “insists that we remain alert to the possibility that we are already incubating seeds of destruction; that our habitual discourse with ourselves as well as with others may already have set us on a path that will consume us”. How these qualities may be pedagogically developed is beyond the scope of the present paper, but it would be a fascinating line of further research to explore. Of course, there cannot be any guarantees that moral failure will not come about when taking moral risk. What is critical is that leaders ask themselves, and are asked by others to whom governance is entrusted, the perennial Socratic question: am I (or are we) doing the right thing?

Contributions and further research

We have made two contributions. Firstly, since there has been a paucity of research on non-rationally justifiable, leader-driven, radical norm change, our paper helps redress the balance. We focused particularly on the two most typical moral risks leaders undertake in the face of moral anomalies: mild moral risks to question the current normative order and larger moral risks that treat shocking acts as morally imperative. If the actions are practically successful, a new normative order emerges. This finding contributes directly to moral entrepreneurship theory. We also specified the mechanism through which followers change their norms: their felt complicity in their leader’s moral-risk-taking. To the extent that moral risks pay off, followers feel vindicated, and the morally shocking act (or a variant) receives a new, high moral evaluation and becomes a norm.

Secondly, we enrich current perspectives on leadership, its embedding in practices, and radical norm change. While we acknowledge the contribution of research on practice-based leadership, its incrementalism leaves little room for revealing a radical change of a normative order. Similarly, institutionalist research sees leaders, embedded in organisational practices, as primarily articulating, preserving, and adapting current norms or, in moral entrepreneurship literature, introducing new, aligned norms to fill moral voids. By adopting a thick conception of practice and embedded agency, we eschewed methodological individualism and explored how leaders, embedded in grounding and clarifying practices, become captivated by anomalies and take a moral risk that cannot be accounted for by rational persuasion, in order to enact new norms and normative orders.

Finally, though our framework offers researchers widely employable conceptual tools for examining leaders’ non-rationally persuasive engagement in changing norms and doing so without resorting to methodological individualism, the main limitation of this article is that we have not examined potentially disconfirming cases: ones where leaders take shocking moral risks, succeed, and do not establish new normative orders. So our first suggestion is to use the framework to examine leaders’ moral-risk-taking or non-rationally persuasive change of normative orders and uncover disconfirming cases if there are any. Secondly, our work suggests we could achieve a clearer picture of such change if researchers identify past and current moral anomalies and leaders’ responses. Thirdly, we open the questions of how leaders can mitigate their moral risk, how they can recover from failed moral-risk-taking, and how they fail to recover. Fourthly, future research may identify different kinds of moral-risk-taking, undertaken in different types of organisations, and how leaders handle them. Fifthly, our account invites the question of what happens when leaders eschew moral-risk-taking. What are the consequences? Finally, our work encourages scholars to explore how leaders can best develop the practical wisdom and moral or tragic imagination to develop new norms and avoid evil.